This article covers a few things to watch out for when debugging HBase programs in Eclipse. I found these notes practical, so I am sharing them here for reference.

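1. Importing the jars HBase needs

Code pasted from the web will be flagged in the editor because the packages it imports cannot be found. It is worth setting HBase up as an Eclipse user library so that it can be reused directly in later projects. The library should contain hbase.jar and hbase-test.jar from the HBase directory (hbase-0.94.7.jar and hbase-0.94.7-tests.jar in the log further down), plus every jar under the lib directory.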
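A quick way to confirm that the user library is really attached to the project is to try loading a few classes by name. The following is only a minimal sketch: `ClasspathCheck` and `isPresent` are hypothetical names introduced for illustration, and the class names being checked are taken from the imports in the appendix and from the logger names in the log.

```java
import java.util.Arrays;
import java.util.List;

class ClasspathCheck {
    /** Returns true if the named class can be found on the current classpath. */
    static boolean isPresent(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<String> required = Arrays.asList(
                "org.apache.hadoop.conf.Configuration",
                "org.apache.hadoop.hbase.HBaseConfiguration",
                "org.apache.zookeeper.ZooKeeper");
        for (String name : required) {
            System.out.println(name + " -> " + (isPresent(name) ? "found" : "MISSING"));
        }
    }
}
```

Run it from the same Eclipse project; any line reporting MISSING suggests that a jar was left out of the user library.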

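2. Eclipse reports "unknown host: Master.Hadoop" while debugging

The error is self-explanatory: the host name Master.Hadoop cannot be resolved. The fix is simple: edit the hosts file on Windows so that the name maps to the IP address of the master.

The hosts file is usually located at: C:\WINDOWS\system32\drivers\etc\hosts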

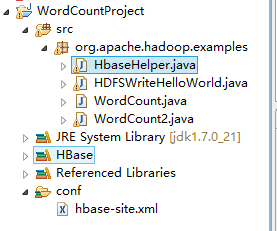
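After editing the hosts file (for instance with a line such as `192.168.1.70 Master.Hadoop`, where the address is only an assumption borrowed from the `hbase.master` setting in the appendix), it can help to check from Java whether the name now resolves. The sketch below uses only the standard `java.net.InetAddress` API; `HostCheck` and `resolve` are hypothetical names.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

class HostCheck {
    /** Returns the resolved IP address as text, or null if the name cannot be resolved. */
    static String resolve(String hostName) {
        try {
            return InetAddress.getByName(hostName).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Defaults to the host name from the error message; pass another name as an argument
        String host = args.length > 0 ? args[0] : "Master.Hadoop";
        String ip = resolve(host);
        System.out.println(ip == null ? "unknown host: " + host : host + " -> " + ip);
    }
}
```

If the name still does not resolve, the hosts entry is probably misspelled or was saved to the wrong file.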

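3. A debugging approach I came across online that relies on a configuration file: add the hbase-site.xml of the HBase cluster you connect to onto the project's classpath, as follows:

First create a folder in the project, named Conf for example, and copy hbase-site.xml from HBase's configuration directory into it. Then right-click the project and go to Properties > Java Build Path > Libraries > Add Class Folder, and tick Conf.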
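`HBaseConfiguration.create()` picks up hbase-site.xml from the classpath, so the step above only works if the folder really ended up there (in the log further down it appears as the last classpath entry, `...\WordCountProject\conf`). A minimal sketch for checking this with the standard class loader API follows; `ConfOnClasspath` and `locate` are hypothetical names.

```java
import java.net.URL;

class ConfOnClasspath {
    /** Returns the URL of the named resource on the classpath, or null if it is absent. */
    static URL locate(String resourceName) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ConfOnClasspath.class.getClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        URL url = locate("hbase-site.xml");
        System.out.println(url == null
                ? "hbase-site.xml is NOT on the classpath"
                : "hbase-site.xml found at " + url);
    }
}
```

The printed URL also shows which copy of the file wins when more than one is on the classpath.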


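Of course, the parameters can also be set by hard-coding them (see the appendix: sample code for HBase operations).

A problem encountered in practice:

The code runs but never responds; only the following log output appears: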

13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:host.name=max-pc
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.version=1.7.0_21
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.home=C:\Program Files\Java\jdk1.7.0_21\jre
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.class.path=C:\Users\max\workspace\.metadata\.plugins\org.apache.hadoop.eclipse\hadoop-conf-1930663343015406040;C:\Users\max\workspace\WordCountProject\bin;E:\program\hbase\hbase-0.94.7.jar;E:\program\hbase\hbase-0.94.7-tests.jar;E:\program\hbase\lib\activation-1.1.jar;E:\program\hbase\lib\asm-3.1.jar;E:\program\hbase\lib\avro-1.5.3.jar;E:\program\hbase\lib\avro-ipc-1.5.3.jar;E:\program\hbase\lib\commons-beanutils-1.7.0.jar;E:\program\hbase\lib\commons-beanutils-core-1.8.0.jar;E:\program\hbase\lib\commons-cli-1.2.jar;E:\program\hbase\lib\commons-codec-1.4.jar;E:\program\hbase\lib\commons-collections-3.2.1.jar;E:\program\hbase\lib\commons-configuration-1.6.jar;E:\program\hbase\lib\commons-digester-1.8.jar;E:\program\hbase\lib\commons-el-1.0.jar;E:\program\hbase\lib\commons-httpclient-3.1.jar;E:\program\hbase\lib\commons-io-2.1.jar;E:\program\hbase\lib\commons-lang-2.5.jar;E:\program\hbase\lib\commons-logging-1.1.1.jar;E:\program\hbase\lib\commons-math-2.1.jar;E:\program\hbase\lib\commons-net-1.4.1.jar;E:\program\hbase\lib\core-3.1.1.jar;E:\program\hbase\lib\guava-11.0.2.jar;E:\program\hbase\lib\hadoop-core-1.0.4.jar;E:\program\hbase\lib\high-scale-lib-1.1.1.jar;E:\program\hbase\lib\httpclient-4.1.2.jar;E:\program\hbase\lib\httpcore-4.1.3.jar;E:\program\hbase\lib\jackson-core-asl-1.8.8.jar;E:\program\hbase\lib\jackson-jaxrs-1.8.8.jar;E:\program\hbase\lib\jackson-mapper-asl-1.8.8.jar;E:\program\hbase\lib\jackson-xc-1.8.8.jar;E:\program\hbase\lib\jamon-runtime-2.3.1.jar;E:\program\hbase\lib\jasper-compiler-5.5.23.jar;E:\program\hbase\lib\jasper-runtime-5.5.23.jar;E:\program\hbase\lib\jaxb-api-2.1.jar;E:\program\hbase\lib\jaxb-impl-2.2.3-1.jar;E:\program\hbase\lib\jersey-core-1.8.jar;E:\program\hbase\lib\jersey-json-1.8.jar;E:\program\hbase\lib\jersey-server-1.8.jar;E:\program\hbase\lib\jettison-1.1.jar;E:\program\hbase\lib\jetty-6.1.26.jar;E:\program\hbase\lib\jetty-util-6.1.26.jar;E:\program\hbase\lib\jruby-complete-1.6.5.jar;E:\program\hbase\lib\jsp-2.1-6.1.14.jar;E:\program\hbase\lib\jsp-api-2.1-6.1.14.jar;E:\program\hbase\lib\jsr305-1.3.9.jar;E:\program\hbase\lib\junit-4.10-HBASE-1.jar;E:\program\hbase\lib\libthrift-0.8.0.jar;E:\program\hbase\lib\log4j-1.2.16.jar;E:\program\hbase\lib\metrics-core-2.1.2.jar;E:\program\hbase\lib\netty-3.2.4.Final.jar;E:\program\hbase\lib\protobuf-java-2.4.0a.jar;E:\program\hbase\lib\servlet-api-2.5-6.1.14.jar;E:\program\hbase\lib\slf4j-api-1.4.3.jar;E:\program\hbase\lib\slf4j-log4j12-1.4.3.jar;E:\program\hbase\lib\snappy-java-1.0.3.2.jar;E:\program\hbase\lib\stax-api-1.0.1.jar;E:\program\hbase\lib\velocity-1.7.jar;E:\program\hbase\lib\xmlenc-0.52.jar;E:\program\hbase\lib\zookeeper-3.4.5.jar;E:\program\hadoop-1.0.0\lib\xmlenc-0.52.jar;E:\program\hadoop-1.0.0\lib\slf4j-log4j12-1.4.3.jar;E:\program\hadoop-1.0.0\lib\slf4j-api-1.4.3.jar;E:\program\hadoop-1.0.0\lib\servlet-api-2.5-20081211.jar;E:\program\hadoop-1.0.0\lib\oro-2.0.8.jar;E:\program\hadoop-1.0.0\lib\mockito-all-1.8.5.jar;E:\program\hadoop-1.0.0\lib\log4j-1.2.15.jar;E:\program\hadoop-1.0.0\lib\kfs-0.2.2.jar;E:\program\hadoop-1.0.0\lib\junit-4.5.jar;E:\program\hadoop-1.0.0\lib\jsch-0.1.42.jar;E:\program\hadoop-1.0.0\lib\jetty-util-6.1.26.jar;E:\program\hadoop-1.0.0\lib\jetty-6.1.26.jar;E:\program\hadoop-1.0.0\lib\jets3t-0.6.1.jar;E:\program\hadoop-1.0.0\lib\jersey-server-1.8.jar;E:\program\hadoop-1.0.0\lib\jersey-json-1.8.jar;E:\program\hadoop-1.0.0\lib\jersey-core-1.8.jar;E:\program\hadoop-1.0.0\lib\jdeb-0.8.jar;E:\program\hadoop-1.0.0\lib\jasper-runtime-5.5.12.jar;E:\program\hadoop-1.0.0\lib\jasper-compiler-5.5.12.jar;E:\program\hadoop-1.0.0\lib\jackson-mapper-asl-1.0.1.jar;E:\program\hadoop-1.0.0\lib\jackson-core-asl-1.0.1.jar;E:\program\hadoop-1.0.0\lib\hsqldb-1.8.0.10.jar;E:\program\hadoop-1.0.0\lib\hadoop-thriftfs-1.0.0.jar;E:\program\hadoop-1.0.0\lib\hadoop-fairscheduler-1.0.0.jar;E:\program\hadoop-1.0.0\lib\hadoop-capacity-scheduler-1.0.0.jar;E:\program\hadoop-1.0.0\lib\core-3.1.1.jar;E:\program\hadoop-1.0.0\lib\commons-net-1.4.1.jar;E:\program\hadoop-1.0.0\lib\commons-math-2.1.jar;E:\program\hadoop-1.0.0\lib\commons-logging-api-1.0.4.jar;E:\program\hadoop-1.0.0\lib\commons-logging-1.1.1.jar;E:\program\hadoop-1.0.0\lib\commons-lang-2.4.jar;E:\program\hadoop-1.0.0\lib\commons-httpclient-3.0.1.jar;E:\program\hadoop-1.0.0\lib\commons-el-1.0.jar;E:\program\hadoop-1.0.0\lib\commons-digester-1.8.jar;E:\program\hadoop-1.0.0\lib\commons-daemon-1.0.1.jar;E:\program\hadoop-1.0.0\lib\commons-configuration-1.6.jar;E:\program\hadoop-1.0.0\lib\commons-collections-3.2.1.jar;E:\program\hadoop-1.0.0\lib\commons-codec-1.4.jar;E:\program\hadoop-1.0.0\lib\commons-cli-1.2.jar;E:\program\hadoop-1.0.0\lib\commons-beanutils-core-1.8.0.jar;E:\program\hadoop-1.0.0\lib\commons-beanutils-1.7.0.jar;E:\program\hadoop-1.0.0\lib\aspectjtools-1.6.5.jar;E:\program\hadoop-1.0.0\lib\aspectjrt-1.6.5.jar;E:\program\hadoop-1.0.0\lib\asm-3.2.jar;E:\program\hadoop-1.0.0\hadoop-tools-1.0.0.jar;E:\program\hadoop-1.0.0\hadoop-core-1.0.0.jar;E:\program\hadoop-1.0.0\hadoop-ant-1.0.0.jar;C:\Users\max\workspace\WordCountProject\conf
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_21\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\Program Files (x86)\Intel\iCLS Client\;C:\Program Files\Intel\iCLS Client\;C:\Program Files (x86)\NVIDIA Corporation\PhysX\Common;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;C:\Program Files (x86)\Intel\OpenCL SDK\2.0\bin\x86;C:\Program Files (x86)\Intel\OpenCL SDK\2.0\bin\x64;C:\Program Files\Intel\Intel(R) Management Engine Components\DAL;C:\Program Files\Intel\Intel(R) Management Engine Components\IPT;C:\Program Files (x86)\Intel\Intel(R) Management Engine Components\DAL;C:\Program Files (x86)\Intel\Intel(R) Management Engine Components\IPT;C:\Program Files\Intel\WiFi\bin\;C:\Program Files\Common Files\Intel\WirelessCommon\;C:\Program Files\TortoiseSVN\bin;C:\Program Files (x86)\Common Files\Thunder Network\KanKan\Codecs;C:\Program Files\Java\jdk1.7.0_21\bin;E:\program\apache-maven-3.0.5\\bin;C:\Program Files\Intel\WiFi\bin\;C:\Program Files\Common Files\Intel\WirelessCommon\;.
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=C:\Users\max\AppData\Local\Temp\
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:os.name=Windows 8
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:os.version=6.2
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:user.name=hadoop
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:user.home=C:\Users\max
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Client environment:user.dir=C:\Users\max\workspace\WordCountProject
13/06/17 16:52:33 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.1.72:2181,192.168.1.71:2181,192.168.1.73:2181 sessionTimeout=180000 watcher=hconnection
13/06/17 16:52:33 INFO zookeeper.RecoverableZooKeeper: The identifier of this process is 8104@max-pc
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Opening socket connection to server 192.168.1.73/192.168.1.73:2181. Will not attempt to authenticate using SASL (unknown error)
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Socket connection established to 192.168.1.73/192.168.1.73:2181, initiating session
13/06/17 16:52:36 WARN zookeeper.ClientCnxnSocket: Connected to an old server; r-o mode will be unavailable
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Session establishment complete on server 192.168.1.73/192.168.1.73:2181, sessionid = 0x43f512f7ede0002, negotiated timeout = 40000
13/06/17 16:52:36 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.1.72:2181,192.168.1.71:2181,192.168.1.73:2181 sessionTimeout=180000 watcher=catalogtracker-on-org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation@34e0907c
13/06/17 16:52:36 INFO zookeeper.RecoverableZooKeeper: The identifier of this process is 8104@max-pc
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Opening socket connection to server 192.168.1.73/192.168.1.73:2181. Will not attempt to authenticate using SASL (unknown error)
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Socket connection established to 192.168.1.73/192.168.1.73:2181, initiating session
13/06/17 16:52:36 WARN zookeeper.ClientCnxnSocket: Connected to an old server; r-o mode will be unavailable
13/06/17 16:52:36 INFO zookeeper.ClientCnxn: Session establishment complete on server 192.168.1.73/192.168.1.73:2181, sessionid = 0x43f512f7ede0003, negotiated timeout = 40000
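The log shows both ZooKeeper sessions being established, so the client stalls somewhere after that step. The source does not say what the cause was. One thing that may be worth ruling out is whether the other endpoints the client needs are reachable from the Windows machine. The sketch below is only a diagnostic aid built on standard `java.net` classes: `EndpointProbe`, `parse`, and `canConnect` are hypothetical names, and the endpoint list combines the quorum from the log with the `hbase.master` address from the appendix.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

class EndpointProbe {
    /** Splits "host1:port1,host2:port2" into unresolved socket addresses. */
    static List<InetSocketAddress> parse(String connectString) {
        List<InetSocketAddress> result = new ArrayList<InetSocketAddress>();
        for (String part : connectString.split(",")) {
            String entry = part.trim();
            if (entry.isEmpty()) {
                continue;
            }
            int colon = entry.lastIndexOf(':');
            if (colon < 0) {
                throw new IllegalArgumentException("Expected host:port but got: " + entry);
            }
            String host = entry.substring(0, colon);
            int port = Integer.parseInt(entry.substring(colon + 1));
            result.add(InetSocketAddress.createUnresolved(host, port));
        }
        return result;
    }

    /** Returns true if a TCP connection can be opened within timeoutMs. */
    static boolean canConnect(InetSocketAddress target, int timeoutMs) {
        try (Socket socket = new Socket()) {
            // Re-create the address so that the host name is resolved at connect time
            socket.connect(new InetSocketAddress(target.getHostString(), target.getPort()), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolvable host names
            return false;
        }
    }

    public static void main(String[] args) {
        // ZooKeeper quorum from the log plus the hbase.master address from the appendix
        String endpoints = "192.168.1.72:2181,192.168.1.71:2181,192.168.1.73:2181,192.168.1.70:60000";
        for (InetSocketAddress target : parse(endpoints)) {
            System.out.println(target + " reachable: " + canConnect(target, 3000));
        }
    }
}
```

Host names of other cluster nodes can be appended to the list in the same `host:port` form; a name that fails here is a candidate for another hosts file entry as described in section 2.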

Appendix: sample code for HBase operations

/**
 * @author MAX.HAN
 * @version 1.0
 * HbaseHelper.java Created on 2013-6-17 02:07:13 PM
 * Copyright (c) 2013 Company,Inc. All Rights Reserved.
 */
package org.apache.hadoop.examples;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.KeyValue;
import org.apache.hadoop.hbase.MasterNotRunningException;
import org.apache.hadoop.hbase.ZooKeeperConnectionException;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

/**
 * Description:
 *
 * @author MAX.HAN
 * @version 1.0
 * Created on 2013-6-17 02:07:13 PM
 */

public class HbaseHelper {

    private static Configuration conf = null;

    // Initialize the configuration
    static {
        Configuration HBASE_CONFIG = new Configuration();
        // Address of the HBase master
        HBASE_CONFIG.set("hbase.master", "192.168.1.70:60000");
        // Must match the value of hbase.zookeeper.quorum in hbase/conf/hbase-site.xml
        // (comma-separated, without spaces between the hosts)
        HBASE_CONFIG.set("hbase.zookeeper.quorum", "192.168.1.71,192.168.1.72,192.168.1.73");
        // Must match the value of hbase.zookeeper.property.clientPort in hbase/conf/hbase-site.xml
        HBASE_CONFIG.set("hbase.zookeeper.property.clientPort", "2181");
        conf = HBaseConfiguration.create(HBASE_CONFIG);
    }

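    // 1. Create a table
    public static void createTable(String tablename, String[] cfs)
            throws IOException {
        HBaseAdmin admin = new HBaseAdmin(conf);
        try {
            if (admin.tableExists(tablename)) {
                System.out.println("Table already exists!");
            } else {
                HTableDescriptor tableDesc = new HTableDescriptor(tablename);
                for (int i = 0; i < cfs.length; i++) {
                    // Column families belong to the table schema and are declared up front
                    // (changing them later means altering the table), whereas columns can
                    // be added on the fly at write time; this is part of HBase's flexibility.
                    tableDesc.addFamily(new HColumnDescriptor(cfs[i]));
                }
                admin.createTable(tableDesc);
                System.out.println("Table created successfully!");
            }
        } finally {
            // Release the admin connection whether or not the table was created
            admin.close();
        }
    }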

    // 2. Insert data
    public static void writeRow(String tablename, String[] cfs) {
        try {
            HTable table = new HTable(conf, tablename);
            try {
                Put put = new Put(Bytes.toBytes("rows1"));
                for (int j = 0; j < cfs.length; j++) {
                    // Arguments: column family, column name (qualifier), value
                    put.add(Bytes.toBytes(cfs[j]), Bytes.toBytes("列1"), Bytes.toBytes("value_13"));
                    put.add(Bytes.toBytes(cfs[j]), Bytes.toBytes("lie2"), Bytes.toBytes("value_24"));
                    table.put(put);
                    System.out.println("Data inserted successfully");
                }
            } finally {
                table.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

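    // 3. Delete a row
    public static void deleteRow(String tablename, String rowkey)
            throws IOException {
        HTable table = new HTable(conf, tablename);
        try {
            List<Delete> list = new ArrayList<Delete>();
            // Bytes.toBytes encodes as UTF-8, independent of the platform default charset
            Delete d1 = new Delete(Bytes.toBytes(rowkey));
            list.add(d1);
            table.delete(list);
            System.out.println("Row deleted successfully!");
        } finally {
            table.close();
        }
    }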

    // 4. Look up a row
    public static void selectRow(String tablename, String rowKey)
            throws IOException {
        HTable table = new HTable(conf, tablename);
        try {
            Get g = new Get(Bytes.toBytes(rowKey));
            Result rs = table.get(g);
            for (KeyValue kv : rs.raw()) {
                // (the source listing is cut off mid-statement here; only the row key is printed)
                System.out.println(new String(kv.getRow()));
            }
        } finally {
            table.close();
        }
    }
}
